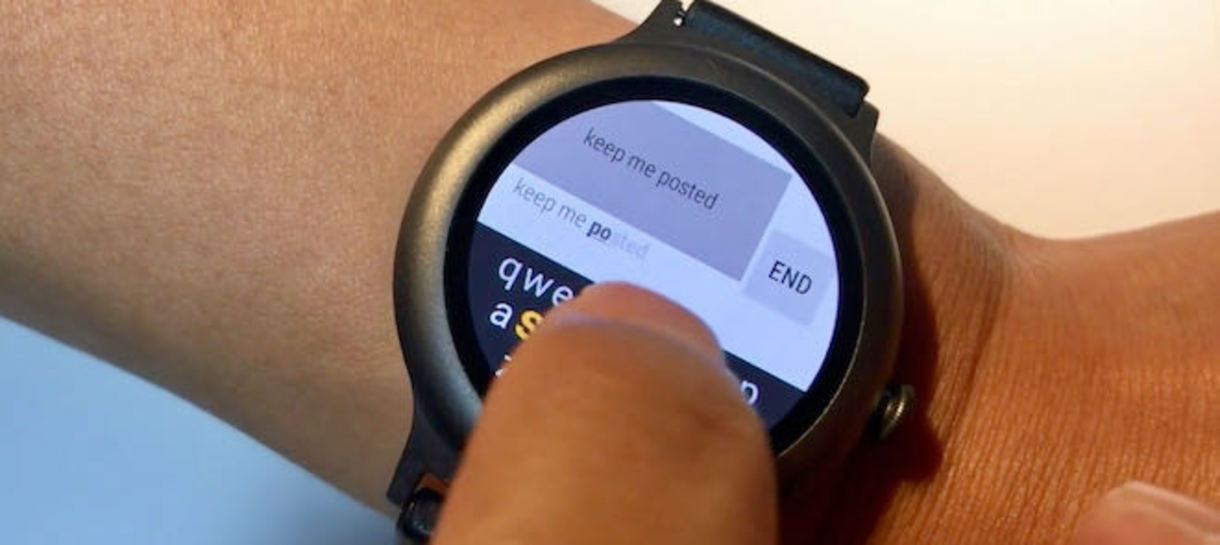

VHA (Visual Hints for Accurate typing) is a novel, space-saving QWERTY-based soft keyboard for ultrasmall touch screen devices. It helps users type more accurately and quickly through efficient visual feedback integrated with autocorrection and prediction techniques that can run in real time on a smartwatch.

- Ku Bong Min, Seoul National University

- Jinwook Seo, Seoul National University

Typing on a smartwatch is challenging because of the fat-finger problem. To address this challenge, we present a soft keyboard for ultrasmall touch screen devices that integrates efficient visual feedback with autocorrection and prediction techniques. After exploring the design space for efficient typing on smartwatches, we designed a novel, space-saving text entry interface based on an in situ decoder and prediction function that can run in real time on a smartwatch such as the LG Watch Style. We describe the implementation details, including the performance optimization techniques, and have released the interface code, APIs, and libraries as open source. We examined the design decisions through simulations and studied the visual feedback methods in terms of performance and user preference. The experiment showed that users could type more accurately and quickly on the target device with our best-performing visual feedback design and implementation. The simulation results showed that a single word suggestion could yield a sufficiently high hit ratio using the optimized word suggestion algorithm.
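To make the hit-ratio idea concrete, here is a minimal sketch of how a single-word suggestion could be scored in a simulation. It is not the algorithm from the paper or the repository: the frequency-count model, the function names (`build_model`, `suggest`, `hit_ratio`), and the definition of a "hit" (the one suggested word equals the intended word before the user finishes typing it) are all assumptions made for illustration.

```python
from collections import Counter


def build_model(corpus_words):
    """Count word frequencies (hypothetical stand-in for a real language model)."""
    return Counter(corpus_words)


def suggest(model, prefix):
    """Return the single most frequent word starting with `prefix`, or None."""
    candidates = [(count, word) for word, count in model.items()
                  if word.startswith(prefix)]
    if not candidates:
        return None
    # Highest count wins; ties are broken alphabetically for determinism.
    _, best_word = min(candidates, key=lambda cw: (-cw[0], cw[1]))
    return best_word


def hit_ratio(model, test_words):
    """Fraction of test words suggested correctly from some proper prefix.

    Assumed definition: a word counts as a hit if the single suggestion
    equals it after typing at least one but not all of its characters.
    """
    if not test_words:
        return 0.0
    hits = 0
    for word in test_words:
        for k in range(1, len(word)):  # proper prefixes only
            if suggest(model, word[:k]) == word:
                hits += 1
                break
    return hits / len(test_words)


if __name__ == "__main__":
    model = build_model(["the", "the", "they", "this", "that", "that", "that"])
    print(hit_ratio(model, ["the", "that", "they", "this"]))
```

With only one suggestion slot, frequent words sharing a prefix crowd out rarer ones, which is why the quality of the suggestion algorithm matters more here than on keyboards that show several candidates.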

To be updated

Please visit our GitHub repository: https://github.com/min9079/VisualFeedbackKeyboard