- Jaemin Jo, Seoul National University

- Sehi L'Yi, Seoul National University

- Bongshin Lee, Microsoft Research

- Jinwook Seo, Seoul National University

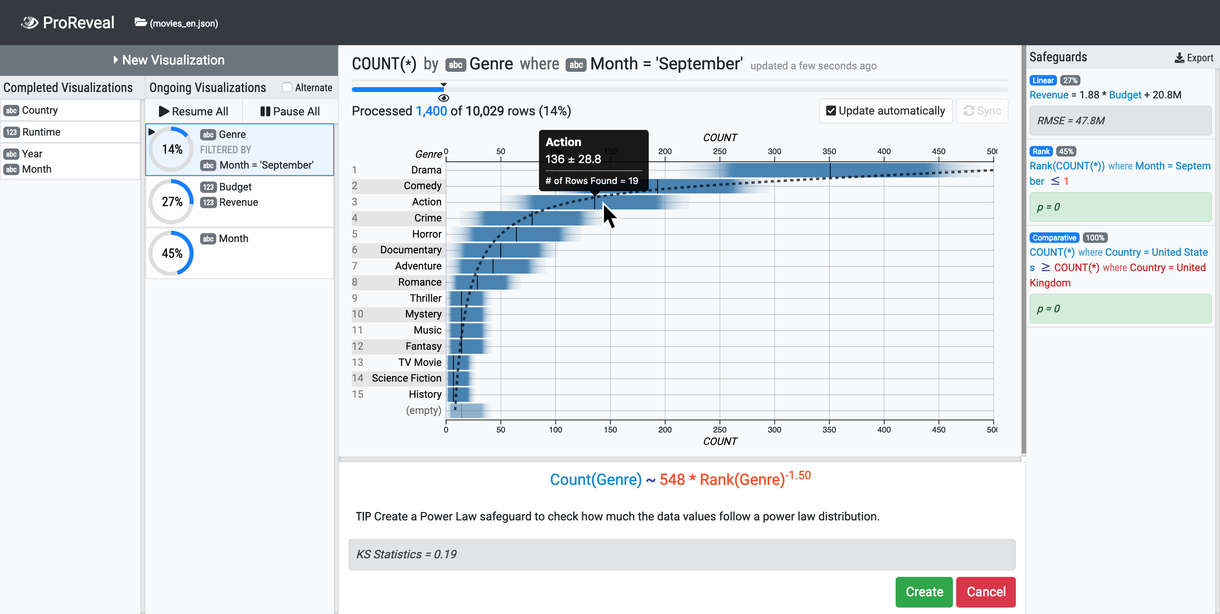

We present a new visual exploration concept, Progressive Visual Analytics with Safeguards, that helps people manage the uncertainty arising from progressive data exploration. Despite its potential benefits, intermediate knowledge from progressive analytics can be incorrect due to various machine and human factors, such as sampling bias or misinterpretation of uncertainty. To alleviate this problem, we introduce PVA-Guards, safeguards that people can leave on uncertain intermediate knowledge that needs to be verified, and derive seven PVA-Guards based on previous visualization task taxonomies. PVA-Guards provide a means of ensuring the correctness of a conclusion and of understanding the reason when intermediate knowledge becomes invalid. We also present ProReveal, a proof-of-concept system designed and developed to integrate the seven safeguards into progressive data exploration. Finally, we report a user study with 14 participants, which shows that people voluntarily employed PVA-Guards to safeguard their findings and that ProReveal’s PVA-Guard view provided an overview of uncertain intermediate knowledge. We believe our new concept can also offer better consistency in progressive data exploration, alleviating people’s heterogeneous interpretation of uncertainty.

Please visit our GitHub repository.