- Hyeon Jeon, Seoul Nationl University

- Yun-Hsin Kuo, University of California, Davis

- Michaël Aupetit, Qatar Computing Research Institute, Hamad Bin Khalifa University

- Kwan-Liu Ma, University of California, Davis

- Jinwook Seo, Seoul National University

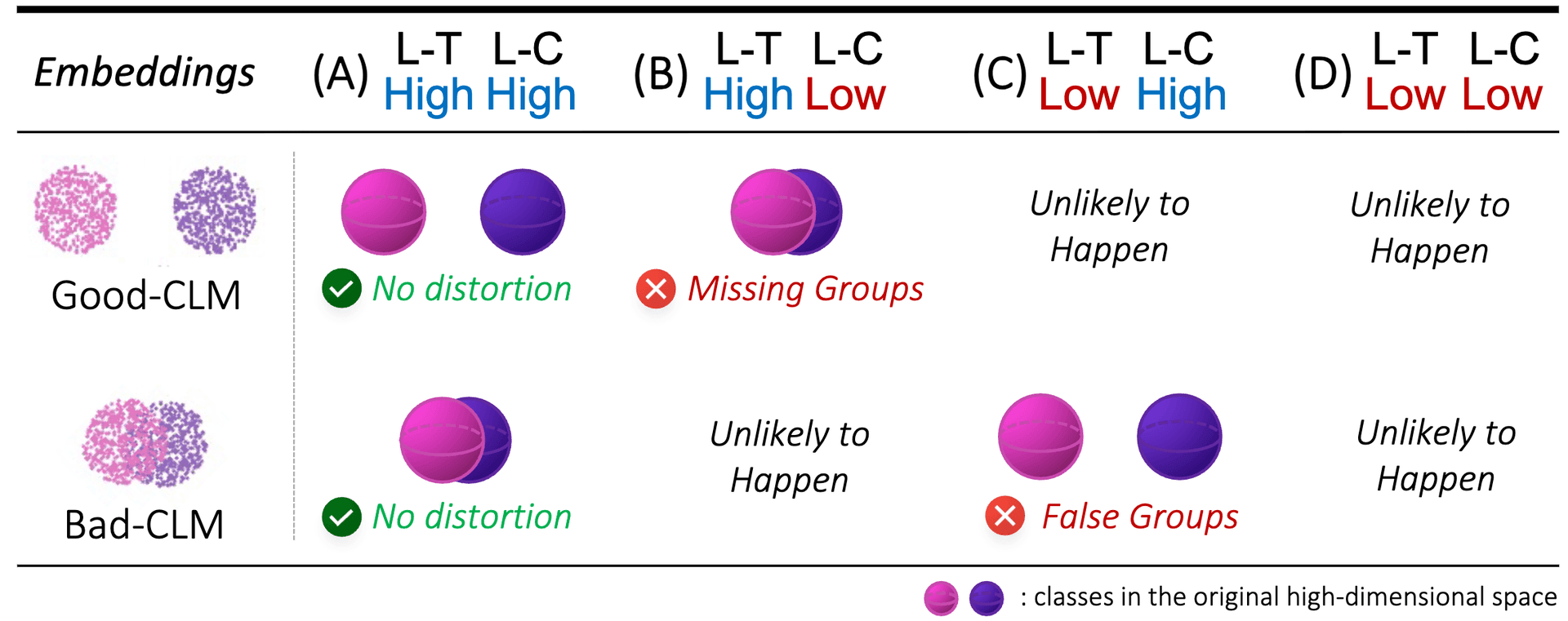

A common way to evaluate the reliability of dimensionality reduction (DR) embeddings is to quantify how well labeled classes form compact, mutually separated clusters in the embeddings. This approach is based on the assumption that the classes stay as clear clusters in the original high-dimensional space. However, in reality, this assumption can be violated; a single class can be fragmented into multiple separated clusters, and multiple classes can be merged into a single cluster. We thus cannot always assure the credibility of the evaluation using class labels. In this paper, we introduce two novel quality measures—Label-Trustworthiness and Label-Continuity (Label-T&C)—advancing the process of DR evaluation based on class labels. Instead of assuming that classes are well-clustered in the original space, Label-T&C work by (1) estimating the extent to which classes form clusters in the original and embedded spaces and (2) evaluating the difference between the two. A quantitative evaluation showed that Label-T&C outperform widely used DR evaluation measures (e.g., Trustworthiness & Continuity, Kullback-Leibler divergence) in terms of the accuracy in assessing how well DR embeddings preserve the cluster structure, and are also scalable. Moreover, we present case studies demonstrating that Label-T&C can be successfully used for revealing the intrinsic characteristics of DR techniques and their hyperparameters.

- TBA

- TBA

- TBA